Base Model Overview

The selected base models and their specific versions are summarized below, each with its reference.

- AlexNet: One of the earliest deep convolutional neural networks to demonstrate the effectiveness of CNNs on large-scale image classification (ImageNet). It consists of five convolutional layers followed by three fully connected layers [1].

- DenseNet-121: DenseNet introduces dense connections, where each layer within a dense block receives the feature maps of all preceding layers as input, promoting feature reuse and mitigating the vanishing-gradient problem. The "121" refers to the number of layers in the network [2].

- EfficientNetV2-S: EfficientNetV2 improves upon EfficientNet with faster training and better parameter efficiency. The "S" variant is the small model of the family, suited to settings where speed and resource use matter [3].

- GoogLeNet: Also known as Inception v1, GoogLeNet introduced the Inception module, which applies filters of several sizes in parallel within the same layer to capture multi-scale features efficiently. It significantly reduced parameter count and computational cost compared with earlier CNNs [4].

- MobileNetV2: Designed for mobile and embedded vision applications, MobileNetV2 uses depthwise separable convolutions and introduces inverted residuals with linear bottlenecks, achieving high accuracy with low computational cost [5].

- ResNet-34: ResNet introduced residual learning with skip connections, enabling the training of very deep neural networks. ResNet-34 is a mid-sized variant with 34 layers, balancing depth and computational efficiency [6].

- ShuffleNet: ShuffleNet is optimized for computational efficiency on mobile devices. It introduces pointwise group convolution and channel shuffle operations to reduce computational cost while maintaining accuracy [7].
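To make the channel shuffle operation mentioned for ShuffleNet more concrete, here is a minimal sketch in plain Python. It treats channels as items in a flat list rather than as tensors, and the function name `channel_shuffle` is chosen for illustration only; it is not taken from any particular library or from the models used here.

```python
def channel_shuffle(channels, groups):
    """Interleave channels across groups, as in ShuffleNet's channel shuffle.

    `channels` is a flat list whose consecutive chunks belong to the same
    group. Conceptually the list is viewed as a (groups, per_group) grid,
    transposed to (per_group, groups), and flattened again.
    """
    n = len(channels)
    if groups <= 0 or n % groups != 0:
        raise ValueError("number of channels must be divisible by groups")
    per_group = n // groups
    # Walk the grid column by column: take the i-th channel of every group.
    return [
        channels[g * per_group + i]
        for i in range(per_group)
        for g in range(groups)
    ]


# Six channels in three groups: [0, 1], [2, 3], [4, 5]
shuffled = channel_shuffle(list(range(6)), groups=3)
print(shuffled)  # [0, 2, 4, 1, 3, 5]
```

After the shuffle, each consecutive chunk contains channels from different input groups, so the next group convolution can mix information that would otherwise stay isolated within a single group.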

Model Performance

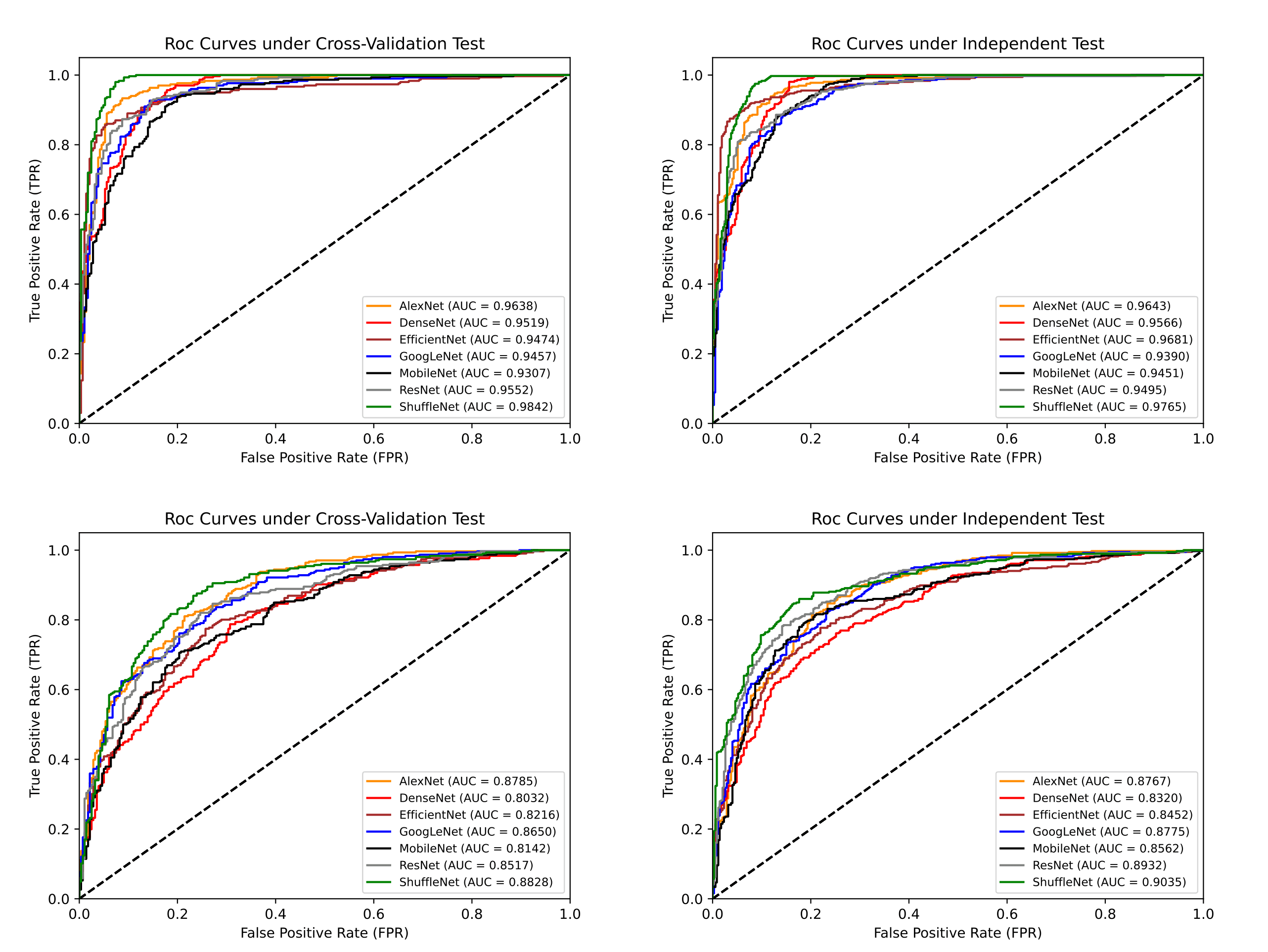

The ROC plots show the performance of the base models trained on US images and generated SWE images from three public datasets ("BUSI" [8], "Breast-Lesions-USG" [9], and "BUS-BRA" [10]). The three models trained with generated SWE images that achieved the highest AUC scores on the test set are offered to users as selectable modes.
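The selection step described here (rank models by test-set AUC, keep the top three) can be sketched as follows. This is an illustration under stated assumptions, not the pipeline actually used: the labels, scores, and model names (`model_a` to `model_d`) are made-up toy values, and AUC is computed with the pairwise-ranking definition rather than with a library routine.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive sample is scored
    higher than a random negative sample (ties count as one half)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


def top_k_by_auc(auc_by_model, k=3):
    """Return the names of the k models with the highest AUC."""
    return sorted(auc_by_model, key=auc_by_model.get, reverse=True)[:k]


# Toy test-set labels (1 = positive class) and hypothetical model scores.
labels = [0, 0, 1, 1, 0, 1]
predictions = {
    "model_a": [0.1, 0.2, 0.8, 0.9, 0.3, 0.7],
    "model_b": [0.1, 0.6, 0.8, 0.4, 0.3, 0.7],
    "model_c": [0.5, 0.6, 0.4, 0.9, 0.3, 0.2],
    "model_d": [0.2, 0.7, 0.6, 0.9, 0.1, 0.5],
}

aucs = {name: roc_auc(labels, scores) for name, scores in predictions.items()}
selected = top_k_by_auc(aucs, k=3)
print(selected)  # ['model_a', 'model_b', 'model_d']
```

The pairwise definition is quadratic in the number of samples, which is fine for a sketch; for larger test sets a rank-based or library implementation would be the more practical choice.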

References

[1] A. Krizhevsky, I. Sutskever, and G. E. Hinton, "ImageNet Classification with Deep Convolutional Neural Networks," Communications of the ACM, vol. 60, no. 6, pp. 84–90, 2017. [Online]. Available: https://doi.org/10.1145/3065386

[2] G. Huang, Z. Liu, L. van der Maaten, and K. Q. Weinberger, "Densely Connected Convolutional Networks," in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2017, pp. 2261–2269. [Online]. Available: https://doi.org/10.1109/CVPR.2017.243

[3] M. Tan and Q. V. Le, "EfficientNetV2: Smaller Models and Faster Training," in Proc. Int. Conf. on Machine Learning (ICML), 2021, pp. 10096–10106. [Online]. Available: https://arxiv.org/abs/2104.00298

[4] C. Szegedy, W. Liu, Y. Jia, et al., "Going Deeper with Convolutions," in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2015, pp. 1–9. [Online]. Available: https://doi.org/10.1109/CVPR.2015.7298594

[5] M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, and L.-C. Chen, "MobileNetV2: Inverted Residuals and Linear Bottlenecks," in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 4510–4520. [Online]. Available: https://doi.org/10.1109/CVPR.2018.00474

[6] K. He, X. Zhang, S. Ren, and J. Sun, "Deep Residual Learning for Image Recognition," in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2016, pp. 770–778. [Online]. Available: https://doi.org/10.1109/CVPR.2016.90

[7] X. Zhang, X. Zhou, M. Lin, and J. Sun, "ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices," in Proc. IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), 2018, pp. 6848–6856. [Online]. Available: https://doi.org/10.1109/CVPR.2018.00716

[8] W. Al-Dhabyani, M. Gomaa, H. Khaled, and A. Fahmy, "Dataset of breast ultrasound images," Data in Brief, vol. 28, 104863, 2020. [Online]. Available: https://doi.org/10.1016/j.dib.2019.104863 (BUSI)

[9] A. Pawłowska et al., "A curated benchmark dataset for ultrasound-based breast lesion analysis," Scientific Data, vol. 11, 148, 2024. [Online]. Available: https://doi.org/10.1038/s41597-024-02984-z (Breast-Lesions-USG)

[10] W. Gómez-Flores, M. J. Gregorio-Calas, and W. C. de Albuquerque Pereira, "BUS-BRA: A Breast Ultrasound Dataset for Assessing Computer-aided Diagnosis Systems," Medical Physics, vol. 51, no. 4, pp. 3110–3123, 2024. [Online]. Available: https://doi.org/10.1002/mp.16812 (BUS-BRA)